I am running The Augmented Software-Engineer (ASE) Meetup in Dublin. The other day we had the first one of this year (Note: Fantastic event. Check it out here).

A year ago we had 50 members. Today we have ~750 members in the meetup group. We are (all) learning a lot: Tools, Best Practices, Workflows, …

And some of the presentations are, obviously, “success-stories”, mainly about how much time you can save by using AI. In the best case you can, for instance, generate a complete Proof-of-Concept (POC) or Minimum-Viable-Product (MVP) from scratch in less than a week (maybe down from a month), which sends a message along the lines of: AI makes Software-Engineers 400% faster/more productive!

Then you talk to CTOs who run large(r) software-engineering organisations (~100 engineers), and the story sounds different. Yes, there are productivity gains, but some of these gains get eaten up again by the additional effort needed to check, validate and correct what the AI has generated. Also, the bigger the organisation, the smaller the share of actual coding time per engineer (because the time engineers need to spend coordinating work with the rest of the organisation increases). And god forbid you are not doing greenfield development from scratch, but maintaining a 10-year-old software-system with 100 million users. In that case, generating something that is roughly right is not what customers/users want (anymore). They want a specific fix or feature-enhancement for a specific problem, without breaking things somewhere else or introducing new problems.

This means that (right now) I observe a weird disconnect. On one side there is the experience of individuals who, for certain tasks (that they can do on their own), experience blips of hyper-productivity (especially in smaller start-ups with fewer than 10 engineers). On the other side there are software-engineering organisations that can barely break even on the ROI (they (maybe) see productivity gains of 5-20%) and (still) have to pay for all the tokens that were spent to materialize that (meager) ROI.

This does not sound so good! What the hell is going on?

First of all: Don’t panic and don’t forget your towel!

I think the root-cause here is that we are still learning how to leverage AI for the craft of software-engineering. In 2025 the main focus was on how to level up individuals/engineers. I think in 2026 this focus will (and has to) shift to leveling up software-engineering organisations.

And this will be challenging, because right now 50% of the organisations just equip the individuals with AI and hope/think that they can get the gains everybody is looking for. I think this will not work.

I think 2026 needs to be(come) the year of The Augmented Software-Organisation (ASO). An ASO understands that AI is not an evolution, but a revolution. This means we need to rethink and reimagine what the software-engineering process and the software-engineering organisation look like. We need to add steps that are needed and remove steps that are no longer needed. More importantly, we need to remove roles that we no longer need and add roles that are (urgently) needed now.

Here are a couple of thoughts …

The disconnect between individual hyper-productivity and organizational mediocrity has a clear explanation: Hype measures local speed improvements, while reality measures system throughput.

AI dramatically accelerates certain micro-tasks:

- Boilerplate code generation

- Test scaffolding

- Mechanical refactoring

- API exploration

- Documentation drafting

But real engineering productivity in an organization includes:

- Coordination across teams

- Code review processes

- Verification and testing

- Decision-making at multiple levels

- Risk management

These systemic bottlenecks cap overall measured gains. You can write code five times faster, but if code review, deployment pipelines, and cross-team coordination haven’t changed, the system still moves at the old pace.
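The effect is essentially Amdahl’s law applied to delivery: only the share of time that AI actually touches gets faster. A minimal sketch, using purely illustrative numbers (30% of delivery time spent coding, coding made five times faster), not measurements:

```python
def overall_speedup(accelerated_share: float, local_speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of the work gets faster.

    accelerated_share: fraction of total delivery time spent on work AI speeds up (0..1)
    local_speedup: how much faster that part becomes (e.g. 5.0 for "five times faster")
    """
    if not 0.0 <= accelerated_share <= 1.0:
        raise ValueError("accelerated_share must be between 0 and 1")
    if local_speedup <= 0:
        raise ValueError("local_speedup must be positive")
    # Unaccelerated time stays as-is; accelerated time shrinks by local_speedup.
    remaining = (1.0 - accelerated_share) + accelerated_share / local_speedup
    return 1.0 / remaining


if __name__ == "__main__":
    # Hypothetical: coding is 30% of delivery time and AI makes it 5x faster.
    print(f"{overall_speedup(0.30, 5.0):.2f}x")  # prints 1.32x
```

With these assumed numbers, a 5x local gain becomes roughly a 32% system gain, and even an infinitely fast coding step would cap out at 1 / (1 - 0.3) ≈ 1.43x, because the other 70% of the pipeline is untouched.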

And not all work benefits equally from AI:

Near-Automation Work (where we should see dramatic gains):

- CRUD and glue code

- Test generation

- Mechanical refactors

- Documentation

Human-Gated Acceleration (where we need workflow changes):

- Feature scaffolding

- Debugging assistance

- Code review support

Minimally Affected Areas (where humans remain essential):

- Product prioritization

- Cross-team coordination

- Risk decisions

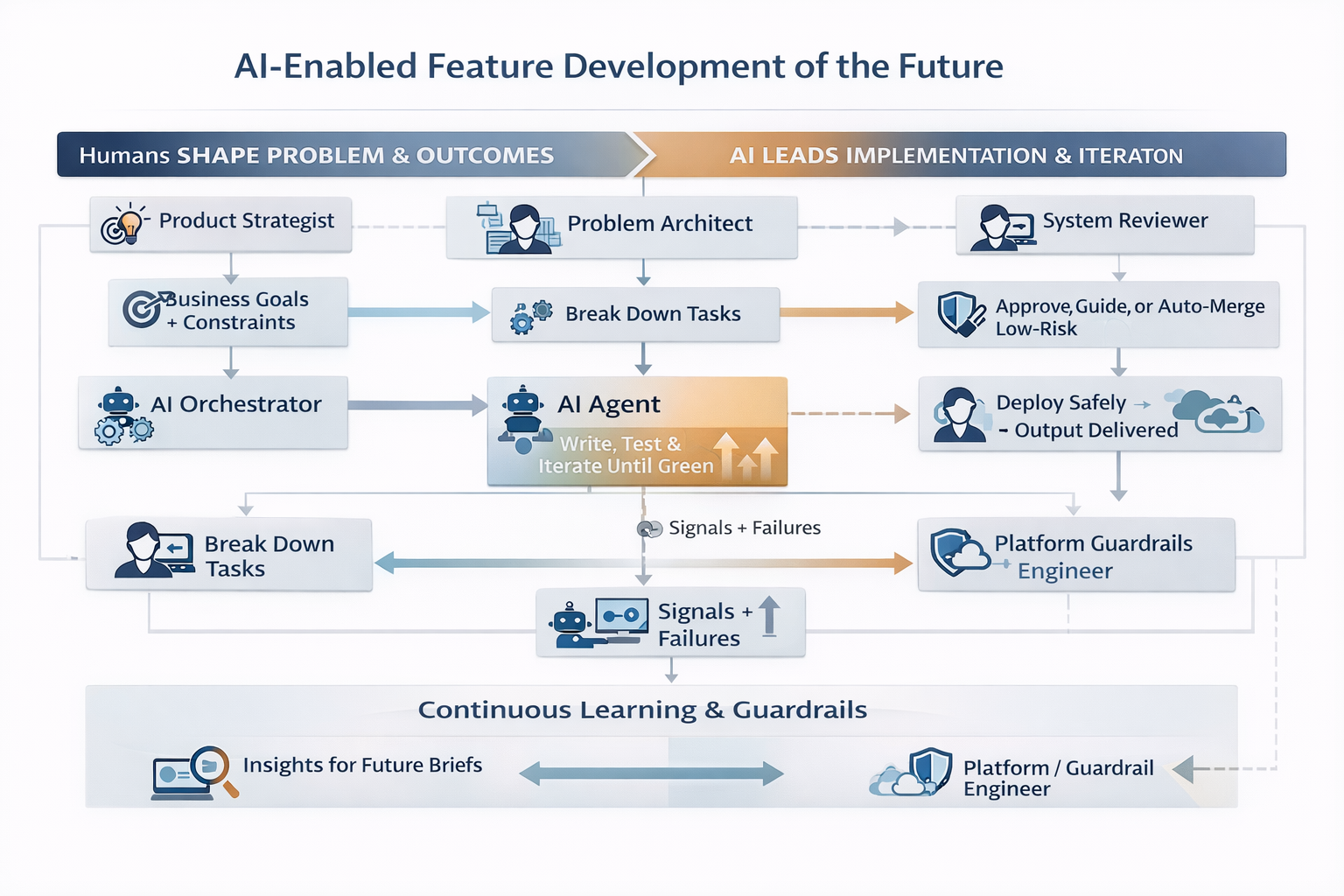

That means to unlock organizational gains, we need to redesign the entire delivery pipeline:

Stage 0 - Problem Intake: Humans define goals, constraints, and invariants

Stage 1 - Solution Framing: AI proposes approaches; humans select constraints

Stage 2 - Task Decomposition: AI generates executable task graphs

Stage 3 - Implementation: AI writes code and tests, iterates until the tests pass (green)

Stage 4 - Tiered Review: Auto-merge for low-risk changes, human oversight based on risk classification

Stage 5 - Release & Feedback: AI monitors signals and proposes optimizations
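Stage 2 is the easiest step to picture concretely. As a minimal sketch, assuming a task graph is simply a mapping from each task to the tasks it depends on (the task names below are hypothetical), Python’s standard `graphlib` module (3.9+) can turn it into waves of tasks that agents could work on in parallel:

```python
from graphlib import TopologicalSorter

# Hypothetical task graph for a small feature: each key depends on the tasks in its set.
task_graph = {
    "add_db_column": set(),
    "update_api_schema": {"add_db_column"},
    "implement_endpoint": {"update_api_schema"},
    "write_endpoint_tests": {"update_api_schema"},
    "update_docs": {"implement_endpoint"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave only depends on earlier waves."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        waves.append(ready)
        # In a real pipeline a task would be marked done only once its tests are green.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(task_graph)):
        print(i, wave)
```

The cycle check in `prepare()` doubles as a cheap sanity check on an AI-generated plan: a circular dependency is rejected before any implementation work starts.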

The key insight: Most organizations are still using 2020 workflows with 2026 tools.

Here is an illustration of what a better workflow/process can look like …

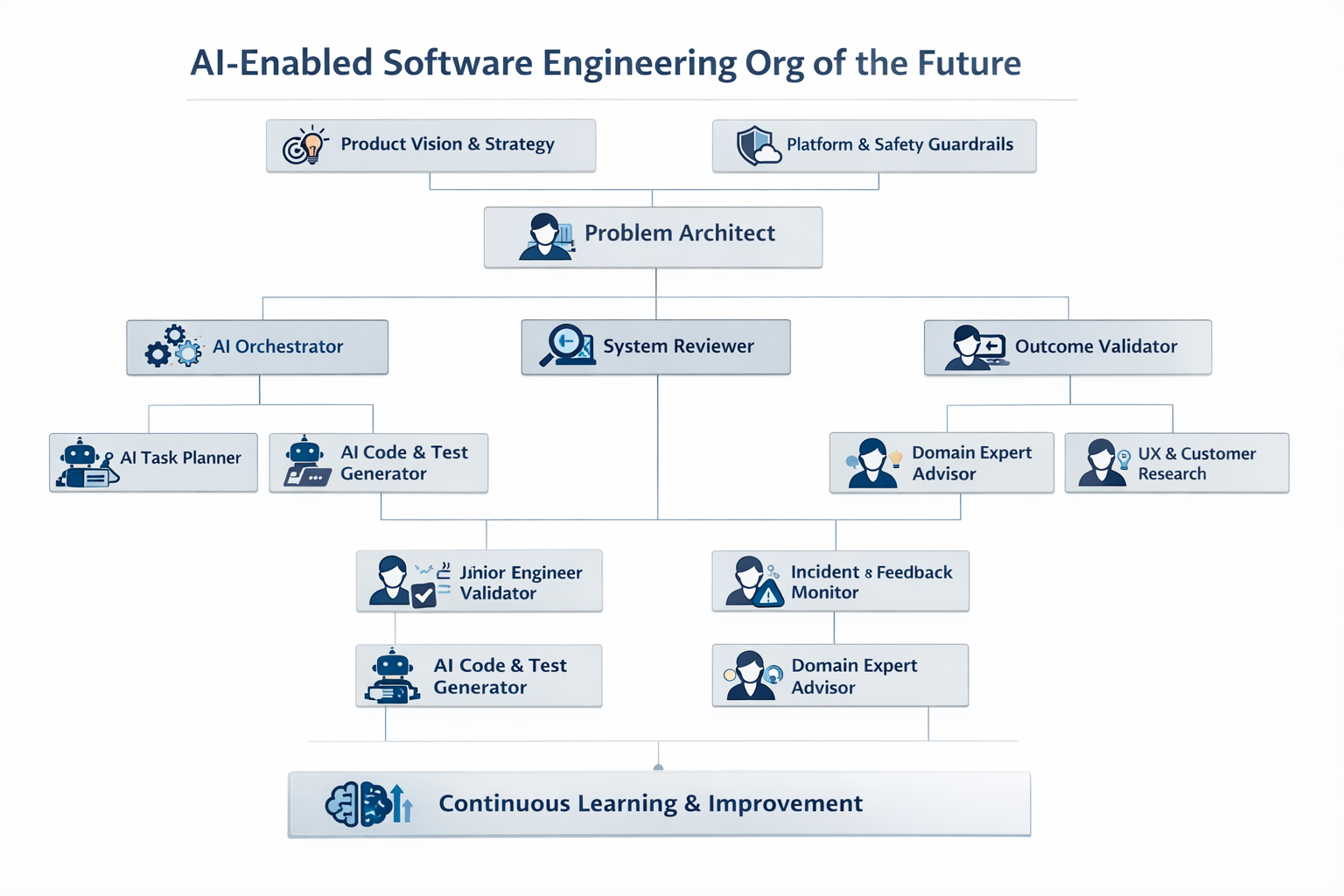

The ASO also needs new high-leverage roles:

Problem Architect - Defines constraints and invariants, not implementations

AI Orchestrator - Manages agent workflows and trust rules

System Reviewer - Reviews intent and correctness, not syntax

Platform Guardrail Engineer - Ensures safe deployment and rollback capabilities

Senior engineers should shift from implementation volume to system judgment. The question changes from “Can you write this feature?” to “Should this feature exist, and what could go wrong?”

Here is an illustration of what the new roles and responsibilities can look like …

Executives need a new mental model. Here’s the reframe: AI might make engineers five times faster at certain tasks, but that is not what we want. What we want is an organisation that doubles its output/throughput. This means we are looking for organisations that are willing to delete work rather than accelerate it.
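A quick worked example shows why doubling requires deleting work. Borrowing Amdahl’s law as a simplified model, let $p$ be the share of delivery time that AI speeds up, $s$ the local speedup on that share, and $S$ the resulting organisational speedup:

$$
S = \frac{1}{(1 - p) + p/s}
\quad\Longrightarrow\quad
p = \frac{1 - 1/S}{1 - 1/s}
$$

For $S = 2$ (double throughput) and $s = 5$ (five times faster), $p = \frac{1 - 1/2}{1 - 1/5} = \frac{0.5}{0.8} = 0.625$: about 62.5% of all delivery time would have to become five times faster. Even with an infinitely fast AI ($s \to \infty$), $p \to 1 - 1/S = 0.5$, so at least half of the pipeline has to be transformed or removed, not just the coding part.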

The highest leverage comes from:

- Redefining work units (what requires human judgment vs AI execution)

- Establishing new trust boundaries (what can auto-merge vs what needs review)

- Redesigning human roles (upstream problem shaping, downstream risk judgment)
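Trust boundaries can be made explicit as a small routing function, in the spirit of Stage 4’s tiered review. This is a minimal sketch under loudly stated assumptions: the `Change` fields, the high-risk path prefixes and the 200-line threshold are all hypothetical, and a real organisation would derive its own rules, for example from its incident history.

```python
from dataclasses import dataclass

# Hypothetical paths that always require human judgment (adjust per codebase).
HIGH_RISK_PREFIXES = ("payments/", "auth/", "migrations/")


@dataclass
class Change:
    files: list[str]
    lines_changed: int
    tests_passed: bool
    touches_public_api: bool


def review_lane(change: Change) -> str:
    """Route a change to a review lane using simple, illustrative risk rules."""
    if not change.tests_passed:
        return "blocked"  # nothing merges on a red build
    if any(f.startswith(HIGH_RISK_PREFIXES) for f in change.files):
        return "senior-review"  # risk decisions stay with a human
    if change.touches_public_api or change.lines_changed > 200:
        return "human-review"
    return "auto-merge"  # small, green, low-risk change


if __name__ == "__main__":
    print(review_lane(Change(["docs/readme.md"], 12, True, False)))  # prints auto-merge
```

Keeping the rules in code (rather than in people’s heads) makes the trust boundary reviewable and versioned, so widening the auto-merge lane becomes a deliberate decision.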

The limiting factor is no longer coding speed. It is organizational design.

What does this mean for 2026?

Organizations that win will:

- Redesign workflows around AI-as-executor, not AI-as-assistant

- Establish auto-merge lanes for low-risk, well-tested changes

- Move engineers upstream to problem definition and constraint specification

- Build guardrails that enable speed without sacrificing safety

- Measure throughput, not just coding speed
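Measuring throughput rather than coding speed can start very simply. A minimal sketch, assuming each change is recorded with the time work started and the time it reached production (the field names `started` and `deployed` and the sample records are hypothetical), computing deployed changes per week and median lead time, loosely similar in spirit to DORA’s “lead time for changes”:

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical change records: when work started and when it reached production.
changes = [
    {"started": datetime(2026, 1, 5, 9), "deployed": datetime(2026, 1, 6, 15)},
    {"started": datetime(2026, 1, 5, 10), "deployed": datetime(2026, 1, 9, 11)},
    {"started": datetime(2026, 1, 7, 14), "deployed": datetime(2026, 1, 8, 10)},
]


def throughput_per_week(records, window_start, window_end):
    """Count changes deployed in [window_start, window_end), normalised to changes per week."""
    deployed = [r for r in records if window_start <= r["deployed"] < window_end]
    weeks = (window_end - window_start) / timedelta(weeks=1)
    return len(deployed) / weeks


def median_lead_time_hours(records):
    """Median time from start of work to production, in hours."""
    return median((r["deployed"] - r["started"]) / timedelta(hours=1) for r in records)


if __name__ == "__main__":
    print(throughput_per_week(changes, datetime(2026, 1, 5), datetime(2026, 1, 12)))
    print(median_lead_time_hours(changes))
```

Neither number cares how fast code was typed; both only improve when changes actually move through review, deployment and coordination faster.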

The goal is to double organizational throughput by eliminating bottlenecks that AI has made obsolete.

This is uncomfortable work. It requires killing processes people have built careers around. It requires trusting AI in ways that feel premature. It requires admitting that some coordination overhead was always wasteful, and AI is just making that waste visible.

But the organizations that figure this out will have an insurmountable advantage. Because while everyone else is debating whether AI makes engineers 20% or 30% faster, ASOs will be shipping double the features with the same headcount.

The race is on.